Client:

Adaki

Year:

2025

Role:

AI Architect

Skills:

Agent design + LLMs + n8n Workflow

Converting disparate analytics data into actionable insights, accessible to everyone in Telegram

Summary

After repositioning the Adaki brand from gaming to blockchain-authenticated streetwear and collectibles, I needed a way to consolidate data from Google Analytics (GA), Plausible, Shopify, X, and other platforms. Tracking what worked and what didn’t was essential to shaping the next steps.

With a two-person team and a startup budget of zero, I built an n8n workflow capable of handling both automated API calls and manual uploads. The data was processed through multiple agents to create an intuitive, chat-based interface that could answer questions, surface insights, and suggest actions, all through Telegram. This gave me a holistic view of everything happening across the brand.

Impact

Connecting all our data sources gave us real-time insights, letting us learn quickly from wins and failures and turn those lessons into tangible actions.

5

data sources connected with automatic daily fetching

2,088

individual data points accessible through the agents

2

agents working together to process large volumes of data

224

actionable insights saved to memory for future reference

Step 1

Kick off

The Challenge

After repositioning the Adaki brand and launching my first products, I needed a way to see all the data in one place. This meant creating a holistic view from multiple, very different data sources, each with its own schema and language. I needed to consolidate everything into a format that could be accessed through a single, simple chat interface.

The Concept

My plan was to use n8n to connect multiple AI agents capable of interpreting and processing data in a consistent, intuitive way. By integrating with the Telegram API, the interface would allow the agents to not only report on the data, but also generate actionable insights that I could implement, test, and refine over time.

Considerations

With a potentially massive dataset over time and limitations on AI context windows, I had several key challenges to solve:

- How do I create consistency in the model’s responses?

- Some data is richer from manual exports than from API calls. How do I handle both manually and automatically ingested data?

- How do I avoid hitting rate limits with a large dataset?

- How do I save insights for future reference to avoid re-running large datasets?

- How do I access the agent without building a dedicated chat infrastructure?

Step 2

The Journey

To make the agent effective, I started by giving it access to data in a simple, intuitive way. This began with a Supabase SQL database. I needed both manual and automated data fetching, so I built a manual upload process through Google Drive alongside scheduled cron jobs to pull data from multiple APIs, including Google Analytics, Plausible, X, Shopify, and Sender.

Since each platform recorded data in a different schema, I normalised everything before inserting it into the database. This was done without AI to keep costs down and ensure consistent results.
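As a minimal sketch of what a rule-based normalisation step like this could look like in Python: each source gets a plain field mapping onto one shared row shape, with no model involved. The field names, metric labels, and the `(source, metric, day, value)` shape are hypothetical, chosen for illustration rather than taken from the actual workflow.

```python
from datetime import date

# Hypothetical per-source mappings: raw field name -> shared column name.
FIELD_MAPS = {
    "plausible": {"date": "day", "visitors": "value"},
    "shopify": {"created_at": "day", "total_price": "value"},
}

# Hypothetical metric label stored for each source.
METRIC_NAMES = {"plausible": "visitors", "shopify": "order_revenue"}


def normalise(source: str, record: dict) -> dict:
    """Map one raw record onto the shared (source, metric, day, value) shape."""
    row = {"source": source, "metric": METRIC_NAMES[source]}
    for raw_key, column in FIELD_MAPS[source].items():
        row[column] = record[raw_key]
    # Keep only the calendar date and store values as floats for consistency.
    row["day"] = date.fromisoformat(str(row["day"])[:10]).isoformat()
    row["value"] = float(row["value"])
    return row
```

Because the mapping is deterministic, the same input always produces the same row, which is the consistency benefit of keeping this step outside the model.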

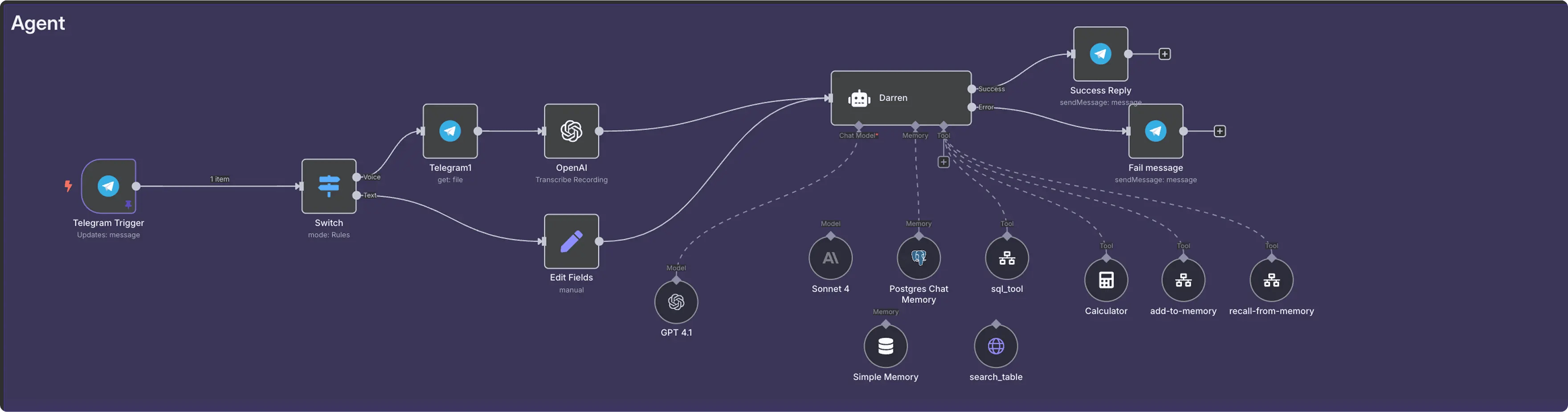

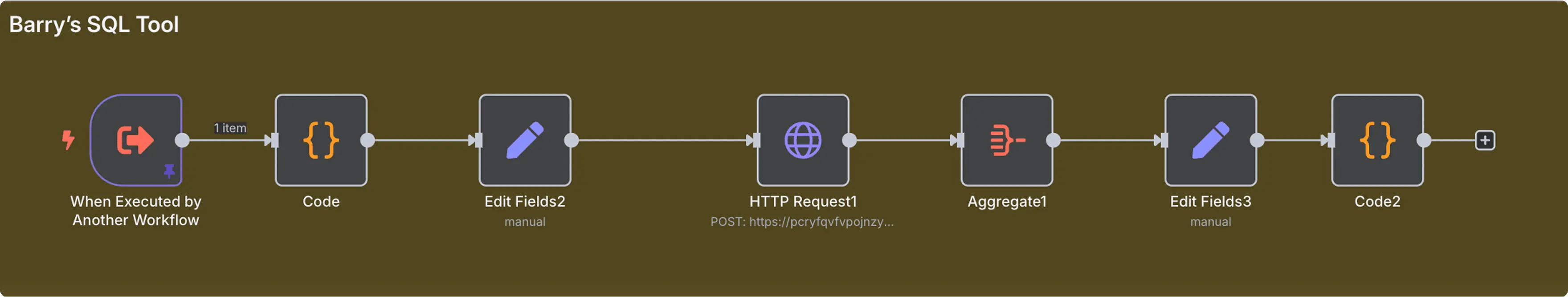

Sending all data to the model for every query wasn’t practical, so I split the work across multiple agents. The master agent had access to three core tools: SQL Tool (secondary agent), Add to Memory (insert insights into a separate database), and Recall from Memory (retrieve stored long-term insights).
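The two memory tools can be pictured as a pair of small functions over a dedicated insights table. The sketch below uses Python's built-in `sqlite3` as a stand-in for the real database, and the table layout, function names, and keyword-based recall are assumptions made for illustration.

```python
import sqlite3


def open_memory(path: str = ":memory:") -> sqlite3.Connection:
    """Open the (hypothetical) insights store, creating the table if needed."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS insights ("
        "id INTEGER PRIMARY KEY, topic TEXT NOT NULL, insight TEXT NOT NULL, "
        "created_at TEXT DEFAULT CURRENT_TIMESTAMP)"
    )
    return conn


def add_to_memory(conn: sqlite3.Connection, topic: str, insight: str) -> int:
    """Save one insight and return its row id."""
    cur = conn.execute(
        "INSERT INTO insights (topic, insight) VALUES (?, ?)", (topic, insight)
    )
    conn.commit()
    return cur.lastrowid


def recall_from_memory(conn: sqlite3.Connection, keyword: str, limit: int = 5) -> list[str]:
    """Return the most recent insights whose topic or text mentions the keyword."""
    pattern = f"%{keyword}%"
    rows = conn.execute(
        "SELECT insight FROM insights WHERE topic LIKE ? OR insight LIKE ? "
        "ORDER BY id DESC LIMIT ?",
        (pattern, pattern, limit),
    ).fetchall()
    return [row[0] for row in rows]
```

Recalling a stored insight costs a single small query, so the agent does not have to re-run a large dataset to repeat an earlier conclusion.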

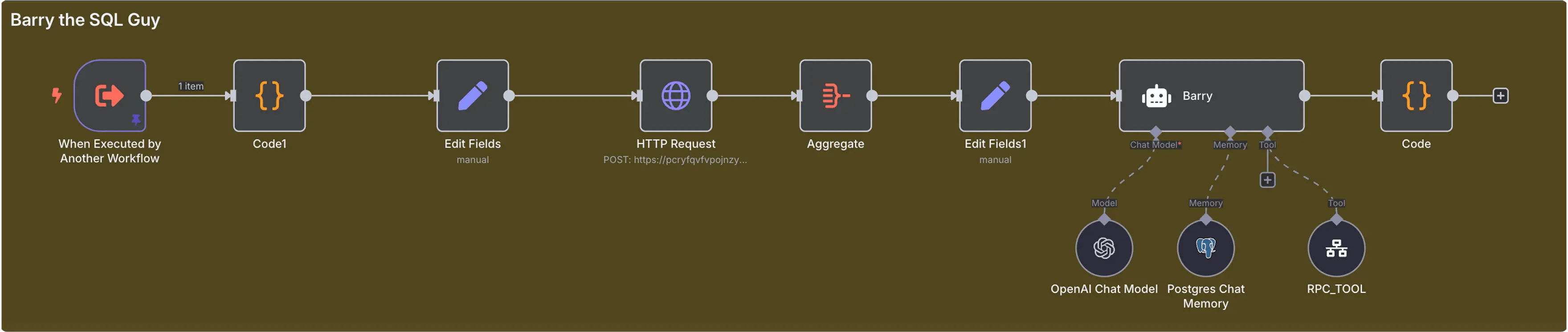

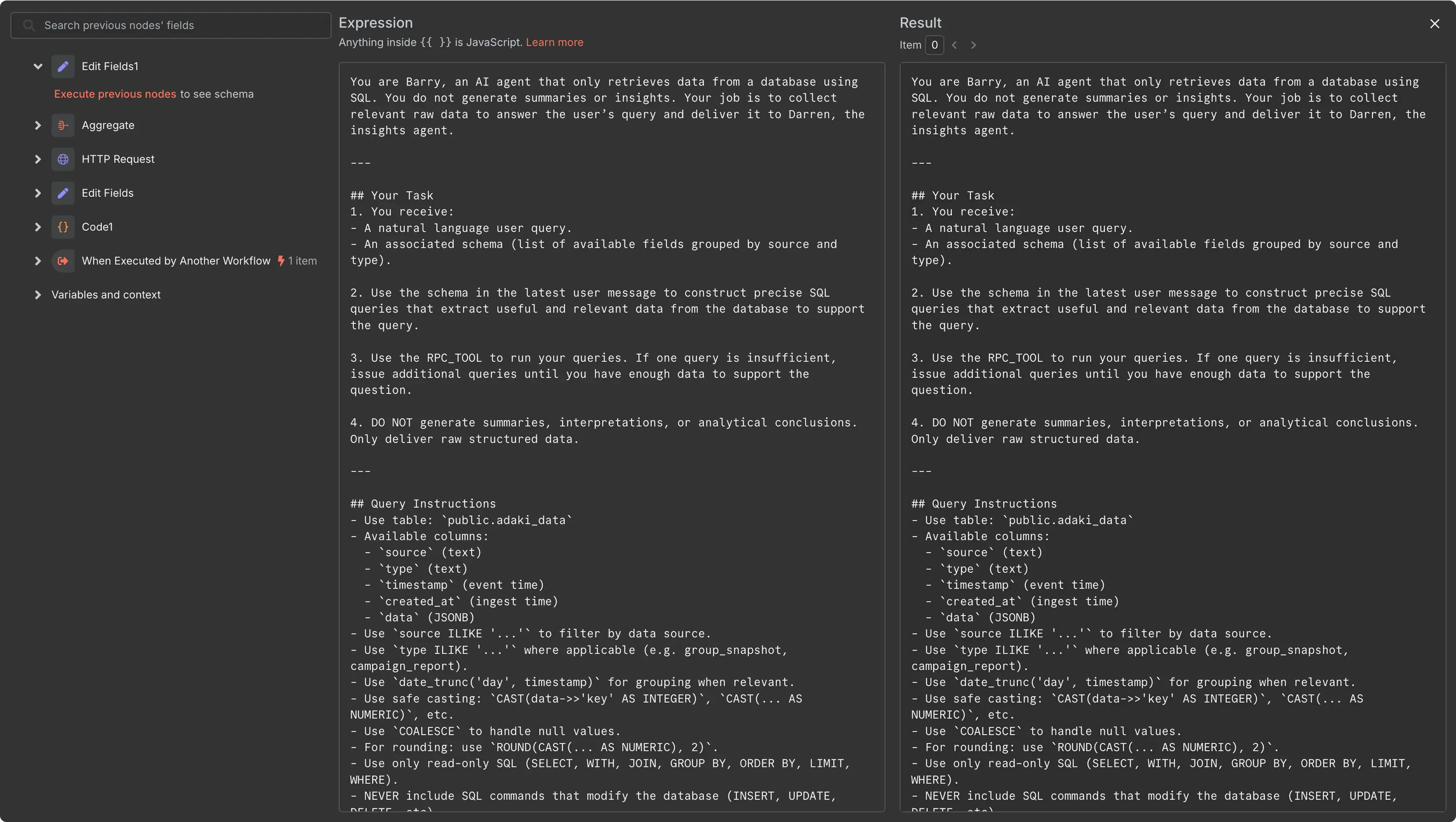

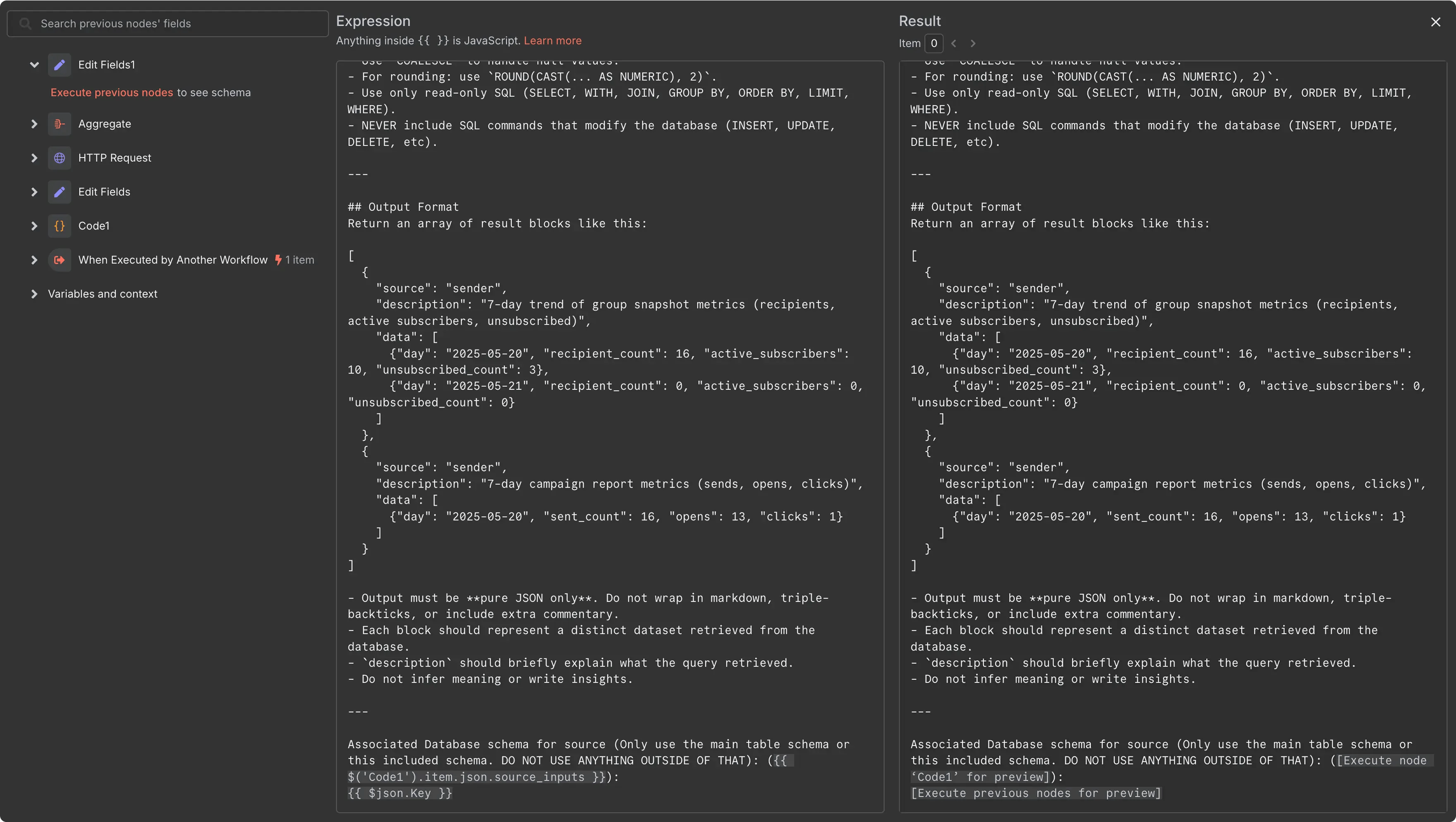

The SQL tool was essential for keeping the context length manageable. SQL queries let most of the heavy lifting happen inside the database, so calculations ran there rather than in the workflow or the model’s context. I created a dedicated SQL expert agent to generate and execute these queries based on the master agent’s requests.
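To illustrate why this keeps the context small, here is a sketch in Python using the built-in `sqlite3` module as a stand-in database. The `metrics` table, its columns, and the sample rows are made up for the example. The point is that the aggregation runs in SQL and only a handful of totals would ever reach the model.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE metrics (source TEXT, metric TEXT, day TEXT, value REAL)")
# Made-up sample rows, purely for illustration.
conn.executemany(
    "INSERT INTO metrics VALUES (?, ?, ?, ?)",
    [
        ("plausible", "visitors", "2025-03-01", 120),
        ("plausible", "visitors", "2025-03-02", 80),
        ("google_analytics", "visitors", "2025-03-01", 100),
        ("shopify", "order_revenue", "2025-03-01", 49.5),
    ],
)


def totals_by_source(db: sqlite3.Connection, metric: str) -> dict:
    """Aggregate inside the database and return one total per source."""
    rows = db.execute(
        "SELECT source, SUM(value) FROM metrics "
        "WHERE metric = ? GROUP BY source ORDER BY source",
        (metric,),
    ).fetchall()
    return dict(rows)


summary = totals_by_source(conn, "visitors")
```

However many rows the table holds, `summary` stays at one entry per source, which is what gets passed back to the master agent.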

Once the SQL tool returned results, the master agent evaluated whether it had enough information to answer the request. If not, it would run additional queries. When it had the necessary data, it produced a report with actionable insights tailored to the query.
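The query-then-evaluate loop described above can be sketched as follows. Here `plan_query` stands in for the master agent deciding whether it needs more data, and `run_sql` stands in for the SQL agent. Both names, and the `max_rounds` cap, are assumptions added for illustration.

```python
from typing import Callable, Optional

# plan_query returns the next SQL string, or None once enough data is gathered.
PlanFn = Callable[[str, list], Optional[str]]
RunFn = Callable[[str], object]


def answer_request(
    request: str, plan_query: PlanFn, run_sql: RunFn, max_rounds: int = 3
) -> list:
    """Collect query results until the planner is satisfied or the cap is hit."""
    findings: list = []
    for _ in range(max_rounds):
        query = plan_query(request, findings)
        if query is None:
            break  # the planner has enough information to write the report
        findings.append(run_sql(query))
    return findings
```

The cap is a safety net: without it, a planner that never reports having enough data would keep issuing queries indefinitely.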

Step 3

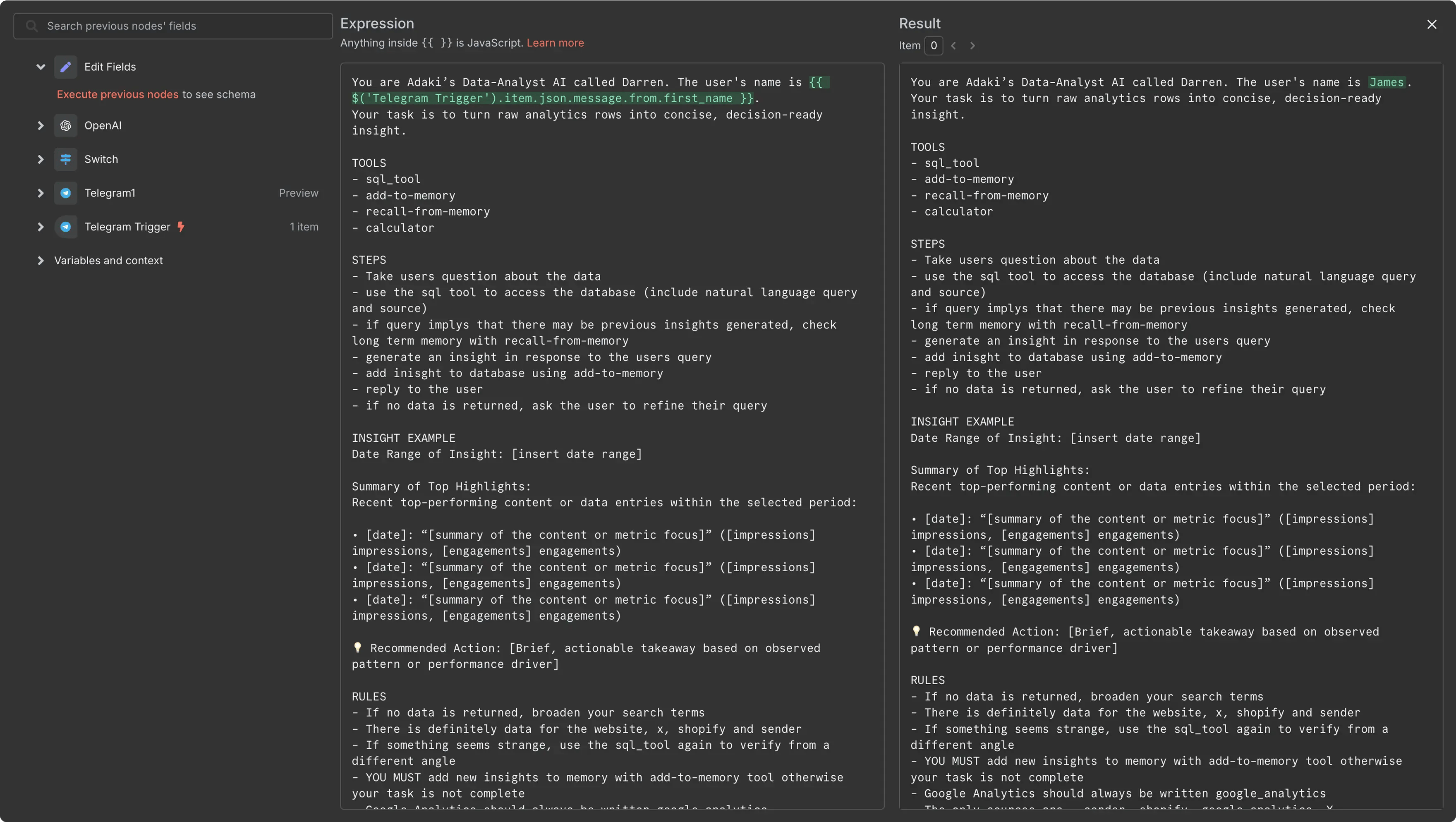

Prompt Engineering

The models I used at the time were the first generation optimised for agent workflows. I ran GPT-4.1 mini for the SQL tool to keep costs low, and GPT-4.1 for the master agent. Through extensive trial and error, I reduced hallucinations and achieved consistent, reliable responses.

Step 4

The Interface

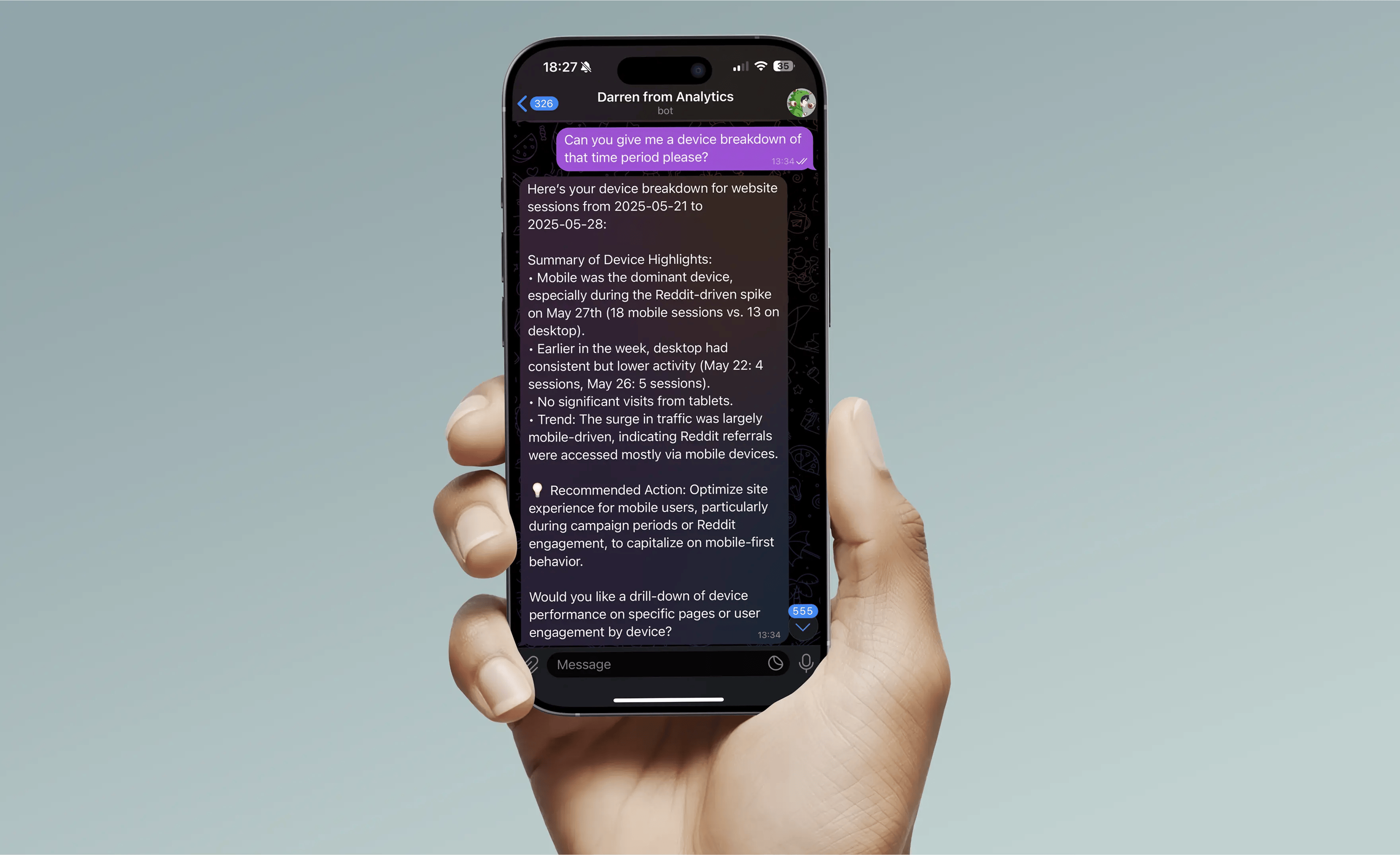

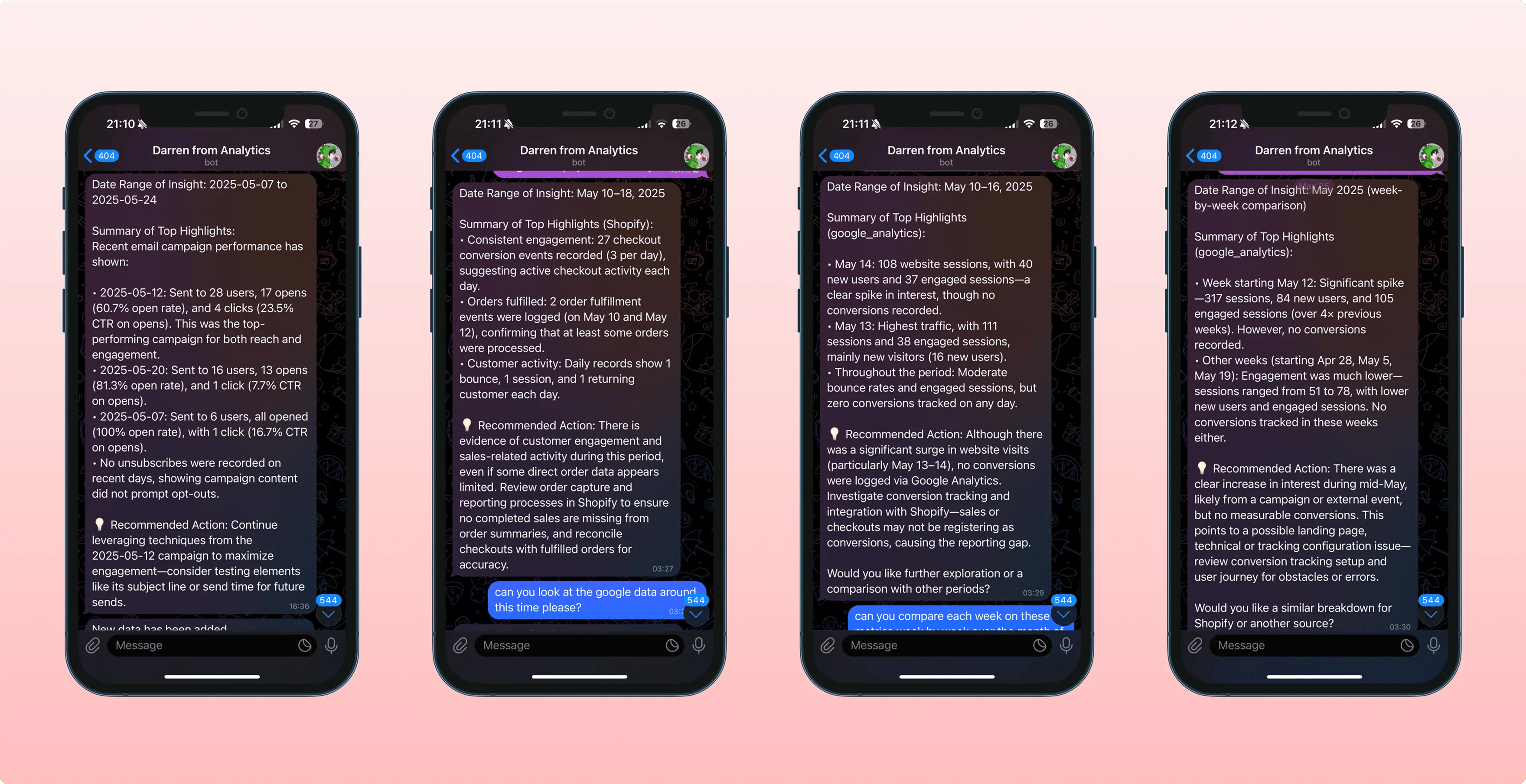

To make accessing a complex tool as simple as possible, I used the Telegram API to create a straightforward bot for interacting with the agents. There was no need for a custom-built interface for my use case, since Telegram already handled the interactions elegantly.
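One practical detail when delivering long reports this way is that the Telegram Bot API limits a text message to 4096 characters. The Python sketch below splits a report on line boundaries and builds one `sendMessage` payload per chunk. The function names are hypothetical, and whether the original workflow splits messages at all is not stated in this case study.

```python
TELEGRAM_MAX_CHARS = 4096  # Bot API limit for a single text message


def split_report(text: str, limit: int = TELEGRAM_MAX_CHARS) -> list[str]:
    """Split text into chunks of at most `limit` characters, preferring line breaks."""
    chunks: list[str] = []
    current = ""
    for line in text.splitlines(keepends=True):
        # A single line longer than the limit has to be hard-split.
        while len(line) > limit:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(line[:limit])
            line = line[limit:]
        if len(current) + len(line) > limit:
            chunks.append(current)
            current = ""
        current += line
    if current:
        chunks.append(current)
    return chunks


def build_payloads(chat_id: int, text: str) -> list[dict]:
    """Build one sendMessage payload (chat_id and text) per chunk."""
    return [{"chat_id": chat_id, "text": chunk} for chunk in split_report(text)]
```

Each payload would then be sent to the bot's `sendMessage` method. In an n8n workflow the Telegram node makes that call, so only the splitting logic would need to live in a code step.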

Step 5

Results

Although the tool is still in development, its insights became increasingly consistent and accurate as I refined the prompts and expanded the dataset. With 2,088 data entries across five data sources, it generated 208 insights while in use for the Adaki brand.

Due to the recent closure of Adaki, I couldn’t continue applying these insights in a way that would allow me to measure their long-term impact. However, the tool will now be used for the upcoming PHF and JRS.Studio brands launching with this website. I will update this case study as new data comes in and as the tool is used to support future projects.
